Sysadmin Sunday: debugging mDNS issues

This past weekend, I was trying to set up some software on my NAS[1] (that's network-attached storage; think "small server with some hard drives attached") and accidentally borked a thing along the way. I thought it would be fun to write up the play-by-play of how I diagnosed and (mostly) fixed the issue, not because this was an especially thorny or illustrative problem, but because I think it shows how a disciplined approach to debugging can be highly effective.

First, let me reiterate that disclaimer:

I am bad at GIMP

With that out of the way, allow me to set the scene: I've just used the physical reset button on the NAS to trigger a password reset,[2] and now I want to open up the web GUI to set a new password. I navigate to the usual URL in my browser, http://nasgul.local:5000,[3] only to be greeted by a lovely "We can't connect to the server" message.

This was working earlier today—what's going on?

There was a time not so long ago for me when such a message would have been a considerable source of consternation, and probably an insurmountable obstacle. But now, with just a little bit more computer networking experience, and some diligent debugging, let's see if we can puzzle our way out.

The (meta) game plan

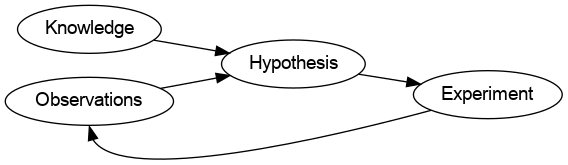

Let's begin by outlining a general approach for tackling this kind of issue. When working through a problem like this, I try to categorize my thinking as follows:

- Knowledge - what facts do I know that are relevant in this situation?

- Observations - what behaviours/outputs am I seeing from the system in question?

- Hypotheses - what plausible conjecture can I make about the system behaviour?

- Experiments - how can I run a test to falsify my working hypothesis?

(Arguably, this should also include an "Assumptions" category. I haven't included it explicitly here, but I'll try to call attention to any assumptions we make—it can be worth writing them down as you go in case you need to revisit them.)

Considering those four categories suggests an algorithm for debugging: use your knowledge and observations to generate a hypothesis; attempt to falsify that hypothesis with an experiment; incorporate the results of the experiment as new observations and repeat.[4] (Obligatory: yeah, science!) I won't be dogmatic about sticking to this exact formula 100% of the time, but it will form our basic roadmap.

Debugging algorithm in graph format

Our quest begins

Let's try putting it to use. First up is the problem that we're facing—that's an observation.

Observation: My browser can't connect to http://nasgul.local:5000.

Knowledge: but that was working an hour ago!

Experiment: forget the GUI on port 5000 for a second; can we connect to the host nasgul.local at all?

$ ping nasgul.local

ping: nasgul.local: Name or service not known

Nope.

Observation: seems we can't connect to nasgul.local at all.

Knowledge: I don't have this hostname hardcoded in my DNS server; it's supposed to be resolved by multicast DNS, aka mDNS. I think I recall having had some issues with mDNS before; I'm definitely not a domain expert here.

Hypothesis: the DNS resolution for nasgul.local is failing. (Based on my previous knowledge, I'm highly confident in this hypothesis, but we should check it just in case.)

Knowledge: I know I've configured my router so that nasgul always gets assigned the internal IP address 192.168.0.201. (Specifically, I've set up a manual DHCP reservation for it.)

Experiment: Since we know the actual IP address we're trying to reach, let's cut out the DNS middleman and see if we get a response:

$ ping -c 1 192.168.0.201

PING 192.168.0.201 (192.168.0.201) 56(84) bytes of data.

64 bytes from 192.168.0.201: icmp_seq=1 ttl=64 time=2.68 ms

--- 192.168.0.201 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 2.684/2.684/2.684/0.000 ms

As expected, we can actually reach nasgul by IP address. The NAS is alive! That strongly suggests that the NAS itself is fine, but something is going wrong in our DNS lookup (i.e. the process of mapping nasgul.local to 192.168.0.201).
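The two pings above amount to a small decision procedure: try the name first, then fall back to the IP address we already know. Here is a minimal sketch of that check as a shell function. The name `diagnose` is made up for illustration, and it assumes a Linux box where `getent hosts` consults the same name-service configuration that `ping` does; treat that as an assumption on other systems.

```shell
# Hypothetical helper: separate "name won't resolve" from "host is down".
# $1 = hostname to look up, $2 = IP address we already know for that host.
diagnose() {
  if getent hosts "$1" >/dev/null 2>&1; then
    echo "name resolves"
  elif ping -c 1 "$2" >/dev/null 2>&1; then
    echo "name lookup fails, but host answers by IP"
  else
    echo "name lookup fails, and host does not answer by IP"
  fi
}

# Example call, using the addresses from this post:
# diagnose nasgul.local 192.168.0.201
```

In the situation described here, I'd expect the middle branch to fire, which points at name resolution rather than at the NAS itself.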

Hmm… now what?

Well, there's one more important technique that I've been keeping in my back pocket: flailing.

I know, I know! It doesn't sound good. In fact, it sounds like the opposite of the "diligent debugging" I've been cramming down your throat. But trust me: used effectively, flailing can be the quickest way to make progress; it's a very important tactic to have in one's toolbelt. I define "flailing" as quick, cursory searches where you have a general idea of what a next step might involve, but you don't yet have a perfectly-formulated question or an experiment to run. For me, flailing usually involves a flurry of web searches (Wikipedia, StackOverflow, and the Arch Wiki are all usually good hits), scanning a man page (often searching for a particular option or theme), or even just using the humble apropos command to find a relevant tool.

Caveat: the effectiveness of flailing drops off rapidly as a function of time—if you're flailing for more than, say, ten minutes, it's probably best to take a step back and re-evaluate your situation more methodically. If you're still stuck, you might be better off trying to talk to a friend/colleague, or intentionally investing the time to gain more foundational knowledge (e.g., reading a man page in full, or finding a relevant reference book.)

In this case, since I suspected an mDNS issue, the relevant Wikipedia page was definitely part of my flailing, which turned up some useful tidbits and triggered a related memory:

Knowledge (from scanning the mDNS Wikipedia page):

- To resolve a hostname via mDNS, the client blasts a packet to the local network via multicast; the target host then also replies via multicast (e.g., "Yes, I'm nasgul.local and I live at 192.168.0.201").

- mDNS messages are UDP packets sent to the IPv4 address 224.0.0.251 on port 5353.[5]

Knowledge (vaguely recalling a Julia Evans blog post[6]): I could probably use tcpdump to inspect traffic on my laptop. Man, I should probably learn how to use that properly someday.

Knowledge: I'm pretty sure the other server on my home network, treebeard, advertises itself over mDNS.

Hmm… so it seems that for this to work, my laptop needs to send the mDNS request to my local network, and then nasgul needs to reply to that packet. That suggests we should test the following:

Hypothesis: at least one of the following mDNS processes is not working correctly:

- The "client" side (i.e. my laptop is not correctly sending the appropriate mDNS query)

- The "server" side (i.e. nasgul is not responding to relevant mDNS queries)

Experiment: can my laptop resolve a different hostname via mDNS?

$ ping -c 1 treebeard.local

PING treebeard.local (192.168.0.200) 56(84) bytes of data.

64 bytes from local.simpsonian.ca (192.168.0.200): icmp_seq=1 ttl=64 time=0.219 ms

--- treebeard.local ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.219/0.219/0.219/0.000 ms

This looks good, but it isn't conclusive: nothing here proves my laptop resolved treebeard.local via mDNS. Based on my recollection of how that's set up, though, I think that's the most likely explanation. Our efforts will probably be better invested in checking up on our partner in this lovely mDNS tango (item #2 in our hypothesis above).

So, next thing to investigate: is nasgul actually replying to our mDNS queries? This one's a little trickier; if nasgul isn't currently replying, then we're trying to show the absence of something. However, the basic mDNS info we gleaned from the Wikipedia page suggests a potential angle of attack: if we could monitor all outgoing/incoming traffic on port 5353, we should be able to see whether the mDNS packets we expect to see are actually being exchanged.

Experiment: is nasgul responding to mDNS queries?

So, uh, how do we actually do that? Well, my gut instinct is that tcpdump can probably do this for us. I haven't used it before though, and I have no idea how it works, so let's flail away… scanning the man page is always a great place to start; in particular, under the EXAMPLES section I spy the following:

To print all IPv4 HTTP packets to and from port 80, i.e. print only packets that contain data, not, for example, SYN and FIN packets and ACK-only packets. (IPv6 is left as an exercise for the reader.)

tcpdump 'tcp port 80 and (((ip[2:2] - ((ip[0]&0xf)<<2)) - ((tcp[12]&0xf0)>>2)) != 0)'

That's more complicated than what we're going for, but two things jump out: the beginning seems to specify TCP only, on port 80. Based on my reading of the man page introduction, that whole expression is probably a filter pattern; i.e., tcpdump will only show packets that match that filter. Without doing any more research, let's just try pattern matching (by substituting the protocol and port we care about) and see if we get lucky:

$ tcpdump 'udp port 5353'

tcpdump: wlp2s0: You don't have permission to capture on that device

Uhh right, we probably need to be root to listen on a network interface?

$ sudo tcpdump 'udp port 5353'

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on wlp2s0, link-type EN10MB (Ethernet), capture size 262144 bytes

This looks promising! As a sanity test, let's first start with the case where we expect to see some packets:

Sub-experiment: do we see mDNS messages when resolving treebeard.local?

(new terminal)

$ ping -c 1 treebeard.local

PING treebeard.local (192.168.0.200) 56(84) bytes of data.

64 bytes from local.simpsonian.ca (192.168.0.200): icmp_seq=1 ttl=64 time=3.22 ms

--- treebeard.local ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 3.223/3.223/3.223/0.000 ms

(tcpdump terminal)

14:12:28.614064 IP6 laptop.mdns > ff02::fb.mdns: 0 A (QM)? treebeard.local. (33)

14:12:28.614156 IP laptop.mdns > 224.0.0.251.mdns: 0 A (QM)? treebeard.local. (33)

14:12:28.668471 IP local.simpsonian.ca.mdns > 224.0.0.251.mdns: 0*- [0q] 1/0/0 (Cache flush) A 192.168.0.200 (43)

Nice! This is a much stronger confirmation than our previous experiment: just eyeballing the text, it seems that my laptop sent its query on IPv4 and IPv6 (given that there's one line for IP and a second for IP6), and my home server treebeard quickly replied with its local IP address, 192.168.0.200. Presumably we could decipher what each part of every line means by studying the mDNS protocol a bit more, but this already looks compelling enough to me.
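If eyeballing gets tedious, a rough tally of questions versus answers can stand in for it. The sketch below is a heuristic built only from the sample lines in this post, not from the tcpdump or mDNS documentation: it treats lines containing "(QM)?" as questions and lines containing "[0q]" as answers. The function name `tally_mdns` is hypothetical.

```shell
# Rough tally of tcpdump's one-line mDNS output, read from stdin.
# Heuristic based only on the sample lines in this post:
#   questions look like "... A (QM)? treebeard.local. (33)"
#   answers   look like "... [0q] 1/0/0 (Cache flush) A 192.168.0.200 (43)"
tally_mdns() {
  awk '
    index($0, "(QM)?") { queries++; next }
    index($0, "[0q]")  { answers++ }
    END { printf "queries=%d answers=%d\n", queries, answers }
  '
}

# Example: paste the captured lines into a file and run
# tally_mdns < capture.txt
```

Here `capture.txt` is just a placeholder name for wherever you saved the tcpdump output. Fed the three treebeard lines above, this should report two questions and one answer; a lookup that reports questions but zero answers is the suspicious case.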

Observation: it appears that when communicating with a correctly-configured host, my laptop is able to resolve an mDNS query.

Finally, let's return to our original experiment to see if nasgul will reply:

(new terminal)

$ ping -c 1 nasgul.local

ping: nasgul.local: Name or service not known

(tcpdump terminal)

14:18:56.071581 IP6 laptop.mdns > ff02::fb.mdns: 0 A (QM)? nasgul.local. (32)

14:18:56.071738 IP laptop.mdns > 224.0.0.251.mdns: 0 A (QM)? nasgul.local. (32)

14:18:57.072640 IP6 laptop.mdns > ff02::fb.mdns: 0 A (QM)? nasgul.local. (32)

14:18:57.072783 IP laptop.mdns > 224.0.0.251.mdns: 0 A (QM)? nasgul.local. (32)

14:18:59.073621 IP6 laptop.mdns > ff02::fb.mdns: 0 A (QM)? nasgul.local. (32)

14:18:59.073776 IP laptop.mdns > 224.0.0.251.mdns: 0 A (QM)? nasgul.local. (32)

Right away, this looks very different! Our DNS lookup still appears to be failing, even though we can see my laptop is sending mDNS queries. In fact, it's now sending the same query several times—probably due to some kind of retry mechanism when it doesn't hear back? With this new insight, let's pause for a moment and take stock of our situation.

Observation: nasgul does not seem to be replying to mDNS queries.

Honestly, that's not entirely surprising? I wouldn't expect a computer to reply to mDNS queries by default; presumably, one needs to run some software to handle that responsibility. The only reason this is unusual is that this whole setup used to work. So if nasgul is operating mostly normally (evidence: we can ping it), but it isn't responding to mDNS queries, maybe… the software to respond to those queries isn't working?

Hypothesis: something is wrong with the software on nasgul responsible for replying to mDNS queries.

Knowledge (random memory): I think that on most Linux systems, Avahi is used to respond to multicast DNS. I seem to remember installing it on treebeard myself.

Okay, this is a little open-ended, but still tractable: let's check out nasgul and see if we can find any software (maybe Avahi?) that could theoretically resolve mDNS queries.

Time to open up an ssh session and flail some more:

$ ssh admin@192.168.0.201

admin@nasgul:/$ apropos mdns

-sh: apropos: command not found

Ah. I guess I should've expected that the Synology-provided OS probably isn't a standard Linux distribution with all the goodies I'd expect. For now, let's just assume that if nasgul is running any mDNS software, it's Avahi, and see where that guess takes us.

Experiment: is there already an Avahi daemon running?

admin@nasgul:/$ ps aux | grep [a]vahi | wc -l

0

Guess not.
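A note on that `grep [a]vahi` pattern, since it can look like a typo: the brackets form a one-character class, so the regex still matches "avahi", but grep's own command line (which contains the literal text "[a]vahi") no longer matches itself in the `ps` output. The sketch below wraps the same idea in a function. `count_procs` is a name I've made up, and quoting the pattern is my addition so the shell can't expand the brackets as a filename glob first.

```shell
# Hypothetical helper: count ps lines that mention the name given in $1.
# Assumes $1 starts with a plain letter or digit (e.g. "avahi").
count_procs() {
  rest=${1#?}            # everything after the first character: "vahi"
  first=${1%"$rest"}     # just the first character: "a"
  # "[a]vahi" matches "avahi" but not grep's own "[a]vahi" argument.
  ps aux | grep -c "[$first]$rest"
}

# Example: count_procs avahi
```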

So: nasgul probably has some software installed to respond to mDNS queries (since this worked before), but we don't know what it is, or why it isn't working. Back to flailing, I guess?

Scattershot internet searches for terms like "Synology" "mdns" "avahi" eventually bring up a blog post that involves mucking around with the internals of a Synology NAS. The details of the post aren't relevant to me, but a few mentions of Avahi catch my eye, especially the command /usr/syno/etc/rc.d/S99avahi.sh stop. That certainly looks like a script related to running Avahi! I don't have that exact script on nasgul, but there is something similar… let's take a look.

admin@nasgul:/$ less /usr/syno/etc/rc.sysv/avahi.sh

-sh: less: command not found

admin@nasgul:/$ # D'oh--remember kids, "less is more"

admin@nasgul:/$ more /usr/syno/etc/rc.sysv/avahi.sh

[...]

admin@nasgul:/$ wc -l /usr/syno/etc/rc.sysv/avahi.sh

287 /usr/syno/etc/rc.sysv/avahi.sh

admin@nasgul:/$ tail -n 31 /usr/syno/etc/rc.sysv/avahi.sh

ServName="`/bin/hostname`"

# make sure $AVAHI_SERVICE_PATH exists

if ! [ -d $AVAHI_SERVICE_PATH ]; then

/bin/mkdir -p $AVAHI_SERVICE_PATH;

fi

case "$1" in

avahi-delete-conf)

CheckServices

;;

afp-conf)

AddAFP $ServName

$SYNOSDUTILS time-machine --afp-status

;;

smb-conf)

AddSMB $ServName

$SYNOSDUTILS time-machine --smb-status

;;

bonjour-conf)

AddBoujourPrinter $ServName

;;

ftp-conf)

AddFtp $ServName

;;

sftp-conf)

AddSftp $ServName

;;

esac

exit 0

Hmm… it's a ~300 line script that defines a bunch of functions, and seems to run some of those functions based on the argument you give it. The only relevant choice seems to be avahi-delete-conf, which is not especially promising. I must confess: out of desperation, I ran this script without any argument just to see what would happen. It didn't seem to do anything (assuming the case statement at the end is the entry point, we just fall right through), but it's a bad idea to run a script you don't fully understand: there's no telling how you might affect the system you're investigating without realizing it! (For instance, what if running that script started up a new background process without announcing it?)
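A lower-risk way to learn what a script like this accepts is to read its case labels rather than run it. The following is a rough sketch: `list_actions` is a hypothetical helper, and its pattern only recognizes labels that sit alone on a line, the way they do in the excerpt above.

```shell
# Hypothetical helper: print the case labels (lines like "smb-conf)")
# found in the script named by $1, without executing the script.
list_actions() {
  sed -n 's/^[[:space:]]*\([A-Za-z0-9_-]\{1,\}\))[[:space:]]*$/\1/p' "$1"
}

# Example, using the path from this post:
# list_actions /usr/syno/etc/rc.sysv/avahi.sh
```

This only lists what the dispatcher recognizes; it says nothing about what each function does, so reading the function bodies is still on you.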

After staring at the name of the file a little longer, and seeing various references to *.service entries in the script, something clicked in my memory:

Knowledge: Oh, right! Pretty much every Linux system has some kind of "init" system that is usually responsible for starting up long-lived services (like the Avahi daemon, presumably). Systemd is a common choice for such a system these days, but there are others too. (I think "SysV" is another one of these that used to be popular, and I see that in the script name.)

Observation: some of these files I see on nasgul look like they could be related to init scripts.

Hypothesis: if Avahi was running before, it was probably controlled by some kind of init system. If that's true, can we use the init system to get Avahi running again?

Experiment: Systemd is popular and I (kinda sorta) know how to use it, maybe we'll get lucky?

admin@nasgul:/$ systemctl status avahi

-sh: systemctl: command not found

Eh, back to flailing. Hey internet, got anything for "synology dsm init service"? Ooh, nice! Majikshoe's House of Mediocrity has a post, "Synology NAS - How to make a program run at startup", which claims that Upstart is the init service in use. Sure enough, there's a file /etc/init/avahi.conf, which matches where the post says Upstart scripts are typically placed. A quick check of Upstart's documentation gives us the command initctl:

admin@nasgul:/$ initctl list | grep avahi

avahi stop/waiting

Now we're talking! It seems that Avahi is installed, and known to the init system—could it be as easy as just restarting it ourselves?

admin@nasgul:/$ initctl start avahi

initctl: Rejected send message, 1 matched rules; type="method_call", sender=":1.93" (uid=1024 pid=2660 comm="initctl start avahi ") interface="com.ubuntu.Upstart0_6.Job" member="Start" error name="(unset)" requested_reply="0" destination="com.ubuntu.Upstart" (uid=0 pid=1 comm="/sbin/init ")

Yuck. There's a lot of data in that message, but I can't make much sense of it. At least the start of the message looks pretty distinctive; let's search for that exactly ("Rejected send message, 1 matched rules") and cross our fingers…

StackOverflow to the rescue! With the blindingly obvious reminder that yeah, you probably do need to be root to mess with running services. One more time:

admin@nasgul:/$ sudo initctl start avahi

avahi start/running, process 26222

Could it be…‽

Hypothesis: with Avahi up and running, our original problem should be solved.

Experiment: rerunning our earliest test:

$ ping -c 1 nasgul.local

PING nasgul.local (192.168.0.201) 56(84) bytes of data.

64 bytes from 192.168.0.201 (192.168.0.201): icmp_seq=1 ttl=64 time=0.962 ms

--- nasgul.local ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.962/0.962/0.962/0.000 ms

Huzzah! And now the web GUI at http://nasgul.local:5000 loads as expected as well. We're done!
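Since the job had silently stopped once already, it may be worth re-checking its state later. As a sketch, the function below pulls a job's goal/state out of `initctl list` output. `job_state` is a made-up name, and the parsing assumes only the two line shapes seen in this post ("avahi stop/waiting" and "avahi start/running, process 26222").

```shell
# Hypothetical helper: read `initctl list`-style lines on stdin and print
# the goal/state (e.g. "start/running") for the job named by $1.
# Returns non-zero if the job is not listed at all.
job_state() {
  awk -v job="$1" '
    $1 == job { state = $2; sub(/,$/, "", state); print state; found = 1 }
    END { exit !found }
  '
}

# Example: initctl list | job_state avahi
```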

Recap

So: how did we fare overall? Not bad, I think. Obviously someone more experienced could skip some of my flailing (e.g., pulling out tcpdump right away, or identifying the init system more quickly/directly, like this), but I think the big takeaway here is that we didn't need decades of knowledge and know-how to figure it out: all it took was some awareness of a few fundamental concepts (e.g., the basic mechanics of mDNS, how long-running processes are usually managed) and a disciplined, stick-to-it attitude while debugging.

One tactic notably omitted from this episode is locating and analysing log files (start by looking under /var/log!). Those are usually a trove of essential information, but we managed to get by without them.
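When you do reach for logs, a quick first pass is to collapse repeated messages so the distinct failures stand out. Here is a minimal sketch, assuming each line starts with a single timestamp field followed by a space (the shape of the Avahi log line quoted in the footnotes); `summarize_log` is a hypothetical name.

```shell
# Hypothetical helper: drop the leading timestamp field from each line,
# then count identical messages, most frequent first.
# Reads the files given as arguments, or stdin if there are none.
summarize_log() {
  cut -d ' ' -f 2- "$@" | sort | uniq -c | sort -rn
}

# Example, using the log path mentioned in the footnotes:
# summarize_log /var/log/upstart/avahi.log
```

Logs with multi-field timestamps would need a different `cut` range, so check a few raw lines before trusting the counts.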

It's also worth pointing out that we haven't completed a full root cause analysis. In particular, I still don't know why the Avahi daemon stopped working in the first place.[7] If we were working on a critical production system, stopping here would be premature: yes, we've addressed the immediate symptom, but how do we know we've really fixed the underlying problem?

Addendum: how can one get better at this?

I've found these kinds of "hands-on" skills to be really useful in a standard software engineering role, but I doubt you'll find a course that covers them in a typical CS degree; this is more hands-on/experiential knowledge than it is academic. So what do you do if you want to hone these skills? Up until recently, my only suggestions would have been "set up some computers at home for fun and do stuff with them" (still highly recommended!) or "try to pick it up on the job," but a recent Hacker News post offers an alternative. Just like how many online services let you practice interview-style programming questions, SadServers.com provides a suite of "real-world" debugging challenges. You're dropped into a VM with some kind of problem (e.g., "why isn't the web server showing the index page?") and tasked with fixing it yourself. I've only done a few, but they were often (painfully) realistic, and lots of fun. I recommend the "Karakorum" scenario in particular if you want to stretch your neurons a bit.

Until next time, may your flailing be ever fruitful.

[1] To be exact, I was trying to install Syncthing on my Synology DS216se. If you're in the same boat, I recommend downloading the appropriate package from the SynoCommunity page (in the Synology web GUI, look at "Control Panel" > "Info Center" and use "DSM version" / "CPU" to figure out what version you need; it was 6.1 armada370 in my case) and installing it manually. I went with this suboptimal approach after a couple others failed: 1) in theory, one should be able to add that SynoCommunity feed as a "Package Source", but that didn't work for me. 2) Upgrading the OS to DSM 7 might've made it easier to install Syncthing, but that seemed like more effort than it was worth, and cursory searches suggested the recommended RAM for DSM 7 is 4x what's in my NAS. Why didn't I subject issue 1) to our rigorous debugging algorithm? Well… when that failed, the only feedback I got was an unhelpful message in the GUI. We need more data than that for a good investigation, and I didn't want to spend time tracking down where (if anywhere) the NAS logs more detailed error information.

[2] Side note: I have no idea why I needed to do this. This happened soon after I set up Syncthing. When I was choosing a username/password for Syncthing, I think I overwrote the general NAS admin password in my password manager by accident, but a) I don't recall having done anything on the NAS itself that should've triggered changing the password (messing up your password manager shouldn't have any impact on the websites you use it with!), and b) after this point, neither the old admin password nor the Syncthing password worked. Spooky.

[3] I know, I know; right now I don't have HTTPS support working on the NAS. But that shouldn't really matter unless someone was snooping on the wireless traffic in my home. And you'd have to be seriously paranoid to give that even a moment of consideration… right?? (I'll get to it one day.)

Also, yeah, if you read any post involving treebeard, you might be picking up on a theme with the hostnames. Embarrassingly, I wouldn't even call myself a Lord of the Rings fan per se, but my wife christened treebeard first and thus set the precedent. After that, I can't not dub the NAS nasgul.

[4] Yes, until you've figured out the issue; apologies if my inexactitude has trapped your brain in a busy loop.

[5] Cute, the standard DNS port twice; that'll make it easier to remember for next time.

[6] Looking this up after the fact, check out the free "zine" she published that gives a super quick and useful introduction to tcpdump. I should start including her material in my go-to flail resources!

[7] Just for fun, afterwards I did pull the relevant log file, /var/log/upstart/avahi.log, which is mostly filled with sporadic but identical failures of the form:

2022-11-06T15:53:18-0500 cname_load_conf failed:/var/tmp/nginx/avahi-aliases.conf

That doesn't mean anything in particular to me (other than noting that nginx is a web server and CNAME is a DNS record type?), but it would be a good first clue if we wanted to continue searching for the root cause.